TinyML brings machine learning directly to microcontrollers and other small devices with limited resources. It lets you run lightweight, efficient models locally, enabling real-time responses and keeping data on the device. Unlike traditional ML, TinyML prioritizes low power consumption and small model size. It’s used for health monitoring, smart homes, and industrial sensors. Curious about how this emerging tech can impact your projects? Keep reading.

Key Takeaways

- TinyML enables deploying machine learning models directly on low-power microcontrollers for real-time data processing.

- It uses lightweight, optimized algorithms suitable for devices with limited memory and processing capacity.

- TinyML applications include health monitoring, smart home devices, agriculture sensors, and industrial maintenance.

- The technology faces challenges like resource constraints, power management, and ensuring data security on small devices.

- Advances in hardware and model optimization are driving the growth and expanding TinyML’s role in IoT and embedded AI.

Understanding TinyML and Its Core Components

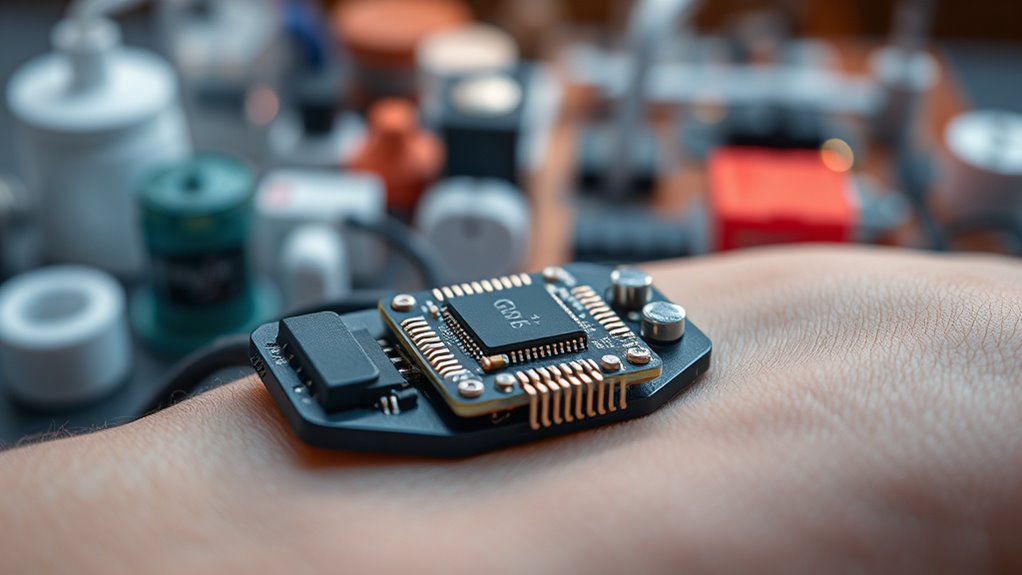

TinyML refers to the deployment of machine learning models directly on small, resource-constrained devices like microcontrollers. These devices have limited memory, processing power, and energy, so TinyML focuses on creating lightweight models that can run efficiently within these constraints. The core components include optimized algorithms, compact neural networks, and efficient data processing techniques. You need models that are small enough to fit into limited storage but still accurate enough for practical tasks. Data collection and preprocessing are essential steps to prepare data for these tiny models. Hardware considerations, like choosing the right microcontroller, also play a vital role. Domain knowledge matters too: for example, understanding bank and finance operations helps when building TinyML applications that process financial data. By combining these elements, TinyML enables intelligent functionality on devices that previously could not support complex AI.

How TinyML Differs From Traditional Machine Learning

While traditional machine learning models are designed to operate on powerful servers and desktops with ample memory and processing capabilities, TinyML focuses on running models directly on small, resource-limited devices like microcontrollers. This shift means TinyML models are smaller, faster, and optimized for low power consumption. They often use simplified algorithms and reduced data precision, for example 8-bit integers instead of 32-bit floating-point values, to fit within tight constraints. Unlike traditional ML, which can process large datasets in the cloud, TinyML performs inference locally, which supports real-time responses and keeps raw data on the device. Additionally, AI security concerns, such as model robustness and vulnerability mitigation, are increasingly relevant in TinyML deployments. Here’s a comparison:

| Aspect | Traditional ML | TinyML |
|---|---|---|
| Hardware Requirement | High (servers, desktops) | Low (microcontrollers, embedded) |
| Model Size | Large | Small |
| Power Consumption | Moderate to high | Very low |
| Data Processing | Cloud-based, offline, or batch | On-device, real-time |
| Use Cases | Complex analytics, training | Sensor data, simple tasks |
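
To make "reduced data precision" concrete, here is a minimal sketch of 8-bit affine quantization, one common way to shrink a model. The function names and the sample weights are made up for illustration, and real toolchains apply more careful calibration than this; treat it as a sketch of the idea rather than how any particular framework does it.

```python
def quantize_int8(values):
    """Map floats to int8 codes; returns (codes, scale, zero_point)."""
    lo, hi = min(values), max(values)
    # Widen the range so that 0.0 is always exactly representable.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    # 255 steps span the int8 range [-128, 127]; fall back to 1.0 for all-zero input.
    scale = (hi - lo) / 255.0 or 1.0
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes, scale, zero_point):
    """Recover approximate floats from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


# Hypothetical weights, chosen only for illustration.
weights = [-0.51, -0.02, 0.0, 0.13, 0.76]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, max_error)
```

Each weight now occupies one byte instead of the four bytes a 32-bit float needs, at the cost of a small rounding error bounded by the quantization step.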

Key Applications and Use Cases of TinyML

TinyML enables a wide range of practical applications by bringing intelligent processing directly to small, resource-constrained devices. You can use it for real-time health monitoring with wearable sensors that detect irregular heartbeats or abnormal vital signs and raise instant alerts. In smart homes, TinyML powers voice recognition and gesture controls, making devices more responsive and energy-efficient. Agriculture benefits from TinyML through field sensors that detect pests or monitor soil moisture, helping to optimize irrigation. Industrial environments use TinyML for predictive maintenance, spotting equipment failures before they happen. Security systems use tiny models for facial recognition or anomaly detection, enhancing safety without relying on cloud connectivity. These use cases show how TinyML turns everyday devices into intelligent, autonomous systems, improving efficiency, safety, and convenience across industries. Low-power processing lets these devices operate effectively within strict energy constraints.
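
As a rough illustration of on-device anomaly detection, the sketch below flags sensor readings that fall far from a running average. It is a simple statistical stand-in, not a neural network, and the class name, threshold, and warm-up length are assumptions chosen for the example. It uses Welford's online algorithm, so it stores only three numbers regardless of how many readings arrive, which suits devices with very little RAM.

```python
import math


class StreamingAnomalyDetector:
    """Flags readings far from the running mean using constant memory."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold  # how many standard deviations count as anomalous
        self.warmup = warmup        # readings to observe before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations (Welford)

    def update(self, x):
        """Return True if x looks anomalous; otherwise learn from it."""
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(x - self.mean) > self.threshold * std:
                return True  # outliers are not folded into the statistics
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)
        return False


# Hypothetical temperature readings with one spike.
readings = [20.0, 20.1, 19.9, 20.2, 19.8] * 4 + [35.0, 20.0]
detector = StreamingAnomalyDetector()
flags = [detector.update(r) for r in readings]
print(flags.index(True))
```

On a real microcontroller the same logic would usually be written in C or C++, but the memory footprint stays the same: a counter and two floats.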

Challenges and Considerations in Deploying TinyML

Deploying TinyML on microcontrollers presents several challenges that require careful planning and optimization. Limited resources, such as memory and processing power, force you to create highly efficient models that fit within strict constraints. You also need to address power consumption, especially for battery-powered devices, so that your models run without draining the battery. Data quality is another concern: training data must be accurate and representative to prevent poor performance. Deploying updates or improvements can be complex, because firmware and hardware limitations restrict flexibility. Security and privacy are essential, especially in sensitive applications. Finally, balancing model complexity and accuracy against resource constraints demands careful tuning, so deployment needs thorough testing and validation. Considering the range of application types and model families helps you design solutions tailored to specific use cases.
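
A quick back-of-the-envelope check can tell you early whether a model has any chance of fitting. The sketch below assumes weights live in flash and activations live in RAM, which is a common arrangement on microcontrollers; the board sizes, reserved amounts, and parameter count are hypothetical placeholders, not figures for any real device.

```python
KIB = 1024


def fits_on_device(num_params, bytes_per_param, peak_activation_bytes,
                   flash_bytes, ram_bytes,
                   reserved_flash_bytes=0, reserved_ram_bytes=0):
    """Check whether weights fit in free flash and activations fit in free RAM."""
    weight_bytes = num_params * bytes_per_param
    free_flash = flash_bytes - reserved_flash_bytes
    free_ram = ram_bytes - reserved_ram_bytes
    return {
        "weight_bytes": weight_bytes,
        "fits_flash": weight_bytes <= free_flash,
        "fits_ram": peak_activation_bytes <= free_ram,
    }


# Hypothetical board: 256 KiB flash and 64 KiB RAM, with half the flash
# and a quarter of the RAM assumed to be taken by firmware and runtime.
board = dict(flash_bytes=256 * KIB, ram_bytes=64 * KIB,
             reserved_flash_bytes=128 * KIB, reserved_ram_bytes=16 * KIB)

# Hypothetical 50,000-parameter model stored as float32 versus int8.
float32_report = fits_on_device(50_000, 4, 20 * KIB, **board)
int8_report = fits_on_device(50_000, 1, 20 * KIB, **board)
print(float32_report)
print(int8_report)
```

Under these assumed numbers the float32 weights overflow the free flash while the int8 version fits, which is the kind of trade-off the tuning process has to resolve.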

The Future of Embedded AI and Emerging Trends

The landscape of embedded AI is rapidly evolving, driven by advances in hardware, algorithms, and data management. You can expect more powerful microcontrollers that handle complex models while remaining energy-efficient. Emerging trends include federated learning, which allows devices to learn locally without sharing sensitive raw data, boosting privacy. TinyML is also becoming integral to IoT applications, enabling real-time insights at the edge. As models shrink further, you’ll see more seamless integration into everyday objects, from wearables to smart home devices. Developments in hardware accelerators will improve processing speeds, making AI on microcontrollers more accessible. Ongoing innovations in model optimization techniques will further improve the ability of TinyML systems to run sophisticated tasks on constrained devices. Overall, embedded AI will become smarter, more secure, and more embedded in daily life, transforming industries and giving your IoT solutions new capabilities.

Frequently Asked Questions

How Does TinyML Impact Device Battery Life and Power Consumption?

TinyML can significantly improve your device’s battery life by running AI processing directly on the microcontroller. Because models run locally, the device does not need to transmit data to the cloud constantly, and radio transmission is often one of the most energy-hungry operations on a small device. As a result, your device can use less power overall, last longer on a battery, and operate more efficiently. This makes TinyML well suited to low-power, always-on applications like wearables and remote sensors.
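
The effect on battery life can be estimated with simple duty-cycle arithmetic: average current is the time-weighted mix of active and sleep current, and battery life is capacity divided by that average. In the sketch below, every current, duration, and capacity value is an illustrative placeholder rather than a measurement from real hardware, so substitute figures from your own datasheets.

```python
def avg_current_ma(active_ma, active_s, sleep_ma, period_s):
    """Time-weighted average current over one wake/sleep cycle."""
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_hours(capacity_mah, average_ma):
    """Idealized battery life, ignoring self-discharge and voltage effects."""
    return capacity_mah / average_ma


CAPACITY_MAH = 200.0  # assumed small battery
SLEEP_MA = 0.01       # assumed deep-sleep current
PERIOD_S = 1.0        # one sensor reading per second

# Scenario A (assumed): run inference locally, 5 mA for 50 ms each cycle.
local_ma = avg_current_ma(5.0, 0.05, SLEEP_MA, PERIOD_S)

# Scenario B (assumed): send raw data by radio, 100 mA for 200 ms each cycle.
radio_ma = avg_current_ma(100.0, 0.2, SLEEP_MA, PERIOD_S)

local_hours = battery_life_hours(CAPACITY_MAH, local_ma)
radio_hours = battery_life_hours(CAPACITY_MAH, radio_ma)
print(f"local: {local_hours:.0f} h, radio: {radio_hours:.0f} h")
```

The exact ratio depends entirely on the numbers you plug in, but the structure of the calculation shows why shortening or avoiding radio activity tends to dominate the power budget.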

What Are the Security Concerns Associated With TinyML Deployment?

Think of deploying TinyML like building a treehouse—small, yet vulnerable. You might worry about data privacy breaches or malicious attacks exploiting limited security features on microcontrollers. You should also consider risks from unauthorized access, data tampering, and firmware vulnerabilities. To stay safe, you need robust encryption, secure boot processes, and frequent updates, because even tiny targets can be big security threats if left unprotected.

Can TinyML Models Be Updated or Retrained After Deployment?

You might wonder if TinyML models can be updated or retrained after deployment. Yes, it’s possible, but it depends on the device and the model’s design. Some TinyML systems support over-the-air updates or incremental learning, allowing you to improve performance or adapt to new data. However, limited resources on microcontrollers mean that retraining on the device itself is uncommon, so updates need careful planning to maintain security and efficiency.

How Does TinyML Handle Real-Time Data Processing Constraints?

Imagine you’re a modern-day wizard, wielding a tiny crystal ball. TinyML handles real-time data by optimizing models for low latency and power efficiency, so you can process data on the microcontroller as it arrives. It uses lightweight algorithms designed for limited resources, so you get quick responses without a round trip to the cloud. This way, you can deploy smart solutions in remote or resource-constrained environments while maintaining effective, real-time data processing.

What Industries Are Most Likely to Benefit From TinyML Innovations?

You’ll find that industries like healthcare, manufacturing, and agriculture benefit greatly from TinyML innovations. In healthcare, it enables real-time patient monitoring and diagnostics. Manufacturing uses it for predictive maintenance and quality control. Agriculture leverages TinyML for precision farming and crop monitoring. These applications improve efficiency, reduce costs, and enhance decision-making. As TinyML advances, expect even broader adoption across various sectors, transforming how you work and live.

Conclusion

As TinyML takes root, it’s like planting a seed that grows into a forest of intelligent devices, quietly transforming your world. With each tiny chip, you become part of a revolution where AI is everywhere, yet unseen. Embrace this budding frontier, where innovation blossoms on microcontrollers and the future whispers promises of smarter, more connected lives. The journey has just begun, so dive in and watch the tiny become mighty.